Efficient Logging and Alert Management

Streamlining Data Pipeline Monitoring

In Matillion ETL instance, inbuilt audit log provides a comprehensive view of all the details to a job. Matillion’s audit log captures all modifications made to a job, ensuring transparency and accountability. Each component in Matillion offers valuable information such as runtime, success/failure, and messages in the form of Automatic Variables.

Our primary use case revolves around obtaining critical insights like job duration, success/failure status, and error messages, create a Logging and Auditing system out of it.

Additionally, we are also creating an Alert Mechanism that sends email for each workflow/job run in Matillion pipelines scheduled. It is vital to receive timely alerts via email about the status the job, whether it is a success or a failure.

Both are quite essential and something that makes the data infrastructure robust, an immense value addition to Data Architecture.

This will dive into two mechanisms that work in tandem within a single job:

- audit logging

- alert mail

By implementing these mechanisms, data management can be significantly enhanced in Matillion. We would have a Snowflake Table updates for each of the source and job run and at the same time we will receive an email from Send Mail Component made and implemented on each workflows job run.

Strengthening Audit Trail Capabilities

Optimizing Alerting Mechanisms

Best Practices for Matillion Audit Management

Real-time Alert Configuration

Automated Log Analysis

Proactive Monitoring Solutions

Audit Logging

To begin implementing audit logging, we need to create an orchestration job specifically for this purpose.

With every job execution, Matillion captures essential records, including start-time, end-time, duration, status, message, job ID, job name, and row count. To populate an audit table with a single record, a generic Orchestration Job is added to the orchestration flow.

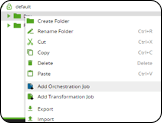

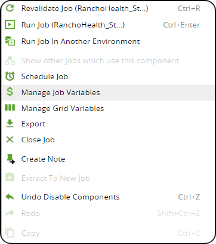

1. Right-click on the project and choose “Add Orchestration Job” from the drop-down menu. This will allow us to create a dedicated job for auditing.

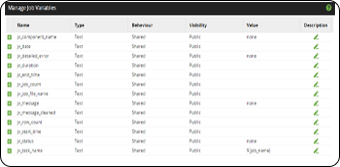

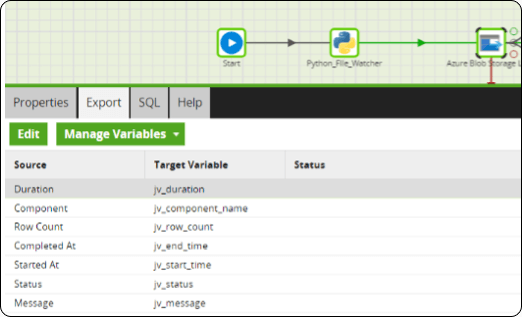

2. The job variables play a huge role as they will store the value temporarily and must be defined to temporarily store the job results. Each orchestration component features an export tab where metadata is stored using variables. Additionally, we can map values to existing variables. Key attributes shared among all components include: Started At, Completed At, Duration, Error Message, Status Row Count Component. While default values are assigned, it is important to export these variables before loading them to ensure their visibility in the audit table.

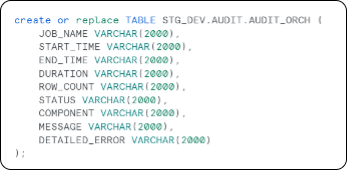

3. To capture the values stored in the variables above, a table needs to be created within Snowflake. This table will serve as a repository for accessing variable values from the Audit_Orch job.

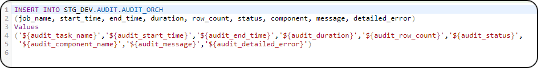

4. In the Audit component creation job, the variables are dynamically replaced by the values in the SQL Script Component in job.

5. Ensure that all job variables, including those required for the Send Email Alert Mechanism, are effectively managed, and added to the Audit job for audit logging to function seamlessly further when we use the same job and shared component of email.

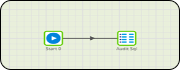

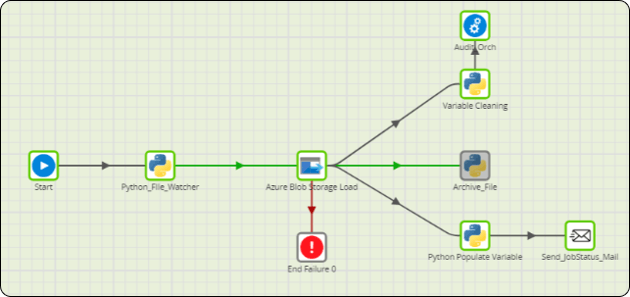

6. Once the variables and job configurations are set, attach the Audit_Orch Job to the respective Job as an orchestration component for auditing purposes.

This ensures that the Audit Table in Snowflake reflects the necessary information for each job and each run.

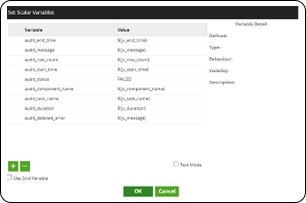

7. Assign scalar variables to the metadata variables to fetch audit details of a job in each pipeline. You want to capture audit information.

With the above steps completed, the audit logging part is now fully functional. However merely keeping logs is not sufficient to effectively utilize logging. To expedite the review process, it is equally important to implement an email alert mechanism that notifies users about the success or failure of the job.

Alert Mail Mechanism (Send Mail)

Implementing an alert email mechanism can greatly enhance the data management process. Especially in scenarios where multiple Master jobs are running bunch of pipelines concurrently.

By sending out email notifications, it becomes easier to identify and debug failed jobs, streamlining the overall workflow. Suppose some Orchestration Jobs may fail. Through email notifications, identifying the failed job becomes effortless, enabling prompt action and efficient troubleshooting.

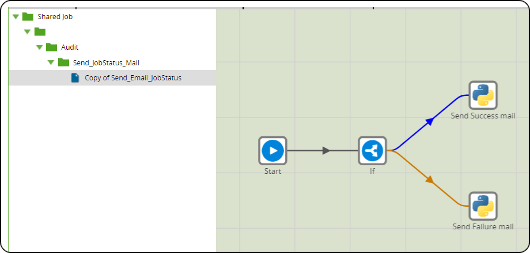

With Matillion’s excellent feature called Shared Job – create your own Workflow/Job as a connector – we can create a shared job called Send Mail Alert. This eliminates the need to create multiple similar jobs, saving both time and effort by simply using it as a connector among pipelines.

Integrating the corresponding receipts’ s email address into the component ensure that emails are delivered promptly.

Send_JobStatus_Mail (Job Name) is using python script components with simple connectivity to email server SMTP etc and using the Matillion’s Status automatic job variable as a condition to send mail, both in success and failure.

Easily drag the Mail shared job onto the canvas to attain job status information.

And that’s it! We have created a shared job named Send_JobStatus_Mail essentially an Alert Mechanism and created an Audit and Logging Mechanism and attaching these (use as any Matillion component) two to any job workflow and it will do the task and handle the key issue of Logging and Alerting in ELT and data Infrastructure.

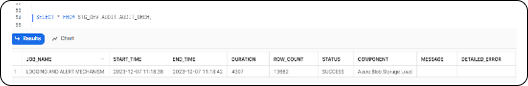

Run the job and verify the logs in the Audit_orch table created in Snowflake. Once the job is completed, the intended users will receive email notifications, resulting in proactive and streamlined processes.

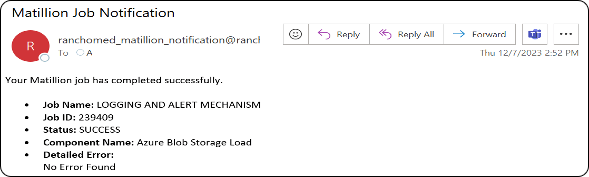

Let us test it live and run a Matillion Job/Workflow:

Here it is, we can see the Audit Table populated with job details along with an email received from a service email address used in Matillion component as we ran a job. You always can go more granular in terms of audit data captured for logging and auditing and in mail.

This is a quite common scenario and a use case faced commonly in data engineering environments and we’ve just demonstrated how you can do it using Matillion and Snowflake.

Media Coverage

Media Coverage Press Release

Press Release